Abusive Twitter post remains live, even after Twitter agrees it breaks rules

Social media sites including Twitter have faced considerable backlash recently over the way they have handled abusive content proliferating on their platforms, with many describing such sites as breeding grounds for hate.

And if recent reports are anything to go by, Twitter especially still has a long way to go to curb a problem that only seems to be getting worse. Many have told stories of the platform keeping patently abusive content live even after it has been reported, reviewed and found to be hateful.

For example, in the aftermath of the Euro 2020 football match between England and Germany, a number of abusive tweets were posted on Twitter. One included a photo of a young German girl crying after her team’s defeat (screenshot below, with faces blurred out), attached to a barrage of hateful and xenophobic insults, some of which we have also had to blur out for being too graphic.

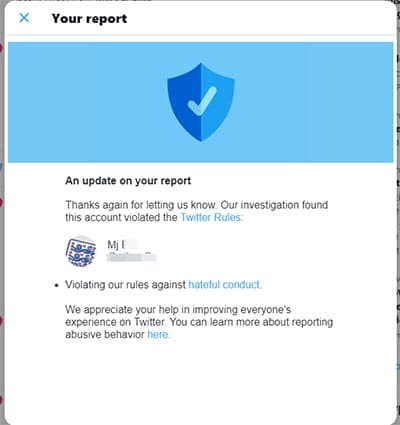

The tweet above was reported on the day it was published, June 29th 2021. Twitter users who reported the tweet say they did not receive the results of their reports until 10 days later.

And despite Twitter agreeing that the content was indeed hateful and broke its rules (below), the tweet above was still not taken down. As we write this article, the tweet is still live, as is the Twitter account that posted it.

This is one of many examples of Twitter’s unbalanced approach to removing content. The platform has been under the spotlight since being accused of letting content spread that led to the January 6th Capitol Hill attack soon after the 2020 Presidential election in the United States.

The Southern Poverty Law Center recently warned that unless Twitter improves its ability to identify and remove certain types of content, more politically motivated violence, fueled in part by Twitter, is inevitable.

Yet the platform has also been accused of automatically banning users for using the monkey emoji, even when the tweets themselves were not related to any type of abuse. Among the accounts suspended was that of a reporter from the UK’s Independent media outlet.

It is still unclear why that emoji content was being automatically identified and removed by Twitter, and the users who posted it suspended, while other types of clearly abusive content (including the tweet pointed out at the start of this article) remain accessible on the platform even after being reviewed and found to be in violation of Twitter’s own rules regarding hateful content.

These stories, all of which are from the last handful of weeks, highlight a platform that is still struggling to enforce its own rules despite years of the same complaints being directed towards it.

And they highlight a platform that is still likely to be on the receiving end of the now-standard criticisms that have plagued it for some time, with no sign of meaningful change on the horizon.
