Facebook still totally inept at purging scams, even after they’re reported

When talking about online scams to the public, to the media or in front of congressional hearings, Facebook’s official and typically apologetic stance is that it takes scams extremely seriously and always moves diligently to purge them from its platform. And it calls on its users to report scams when they encounter them to help in that goal.

But the reality for almost anyone “on the ground” is somewhat different. In fact, Facebook only seems to act upon a fraction of the reports made by us and by the network of communities that help the social network identify and remove a whole host of pages, profiles, comments and posts that clearly violate Facebook’s own terms of service concerning fraud and misinformation.

And Facebook’s inability to remove scams appears systemic, with the company failing to act on a whole host of different scammers and scams that plague its platform. From fake profiles luring victims to spammy marketing webpages, to counterfeit retailers using Sponsored Ads to force their scams down the throats of users. From get-rich-quick scams misusing the identities of celebrities to romance scammers looking to swindle Facebook users out of their savings.

Not only does Facebook allow such scammers to infiltrate its platform in the first place, but in all of the cases above it has also refused to remove them even after they were reported to Facebook’s own moderation team.

As a case in point, let’s look at a comment made by “Ryan”.

We discussed only a few days ago how a plethora of different scammers have been turning their attention to the comments made on viral Facebook posts made by pages. (The sorts of posts that ask for trivial engagement such as “tag a friend who…” etc. etc.) Ryan was one such scammer who posted the same message to dozens of female users asking them to add him as a friend.

To anyone with even a cursory knowledge of online scams, this is certainly a romance scammer. Targeting users of the opposite sex. Requests to connect on social media. Identical messages sent to multiple users. And Ryan was using a stolen profile and cover photo belonging to a member of the armed forces.

Romance scams are among the most devastating scams that plague the Internet. The scammer develops an online “relationship” with a victim, only to begin asking for money. For an individual victim they are one of the most costly scams, since a scammer can fleece large amounts of money from a single person. Not only that, but such scams can be extremely psychologically damaging.

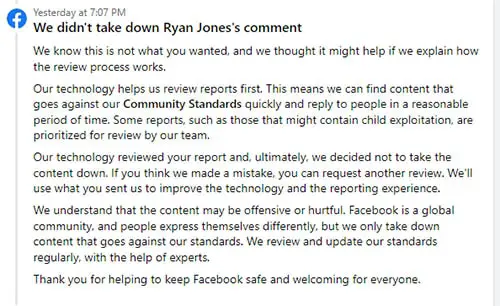

After we reported Ryan’s comment, Facebook came back a day later claiming their “technology” had reviewed the comment and deemed it not to be in violation of their terms of service. A mistake, obviously, but the only recourse on offer was an appeal. The appeal was equally unsuccessful after it too was reviewed by Facebook’s technology.

Right in front of our eyes was one of the most potentially dangerous and unscrupulous types of online scammer, seeking their next victim. Not hiding in the shadows but perfectly visible in the light of day. Operating with apparent impunity.

Facebook’s “technology” reviewed the post and deemed it acceptable.

These are not isolated incidents. With increasing frequency inside the large network of fellow Facebook users working to remove scams from the platform, we’re met with exhausted responses akin to “what’s the point?” and “I just don’t bother reporting anything to Facebook anymore.”

Facebook has long seemed to employ a “critical mass” approach to most user-generated reporting. Should a high enough number of people report a particular piece of content, it may get reviewed by a human moderator. For everything else, the report is “reviewed” by robots. That is to say, Facebook’s technology attempts to determine if that content goes against Facebook’s community standards. And the conclusion is often one that is patently an error, which is hardly surprising given Facebook’s antiquated reporting categories.

Could Facebook’s technology have determined that “Ryan” made the same post to dozens of other social media users on the same Facebook post? Could the technology see that “Ryan” was using a profile picture and cover photo of someone else, someone in the military? Could the technology determine that the unsolicited request to connect with someone on Facebook was out of place, if not downright suspicious in itself? Probably not.

Did Facebook’s technology even know what it was looking for, since it was equipped only with the vaguest of details; that this was reported as ‘a scam’?

Facebook appears to consciously rely on user-generated reports to identify content that goes against its Terms of Service, while simultaneously employing an archaic reporting system that will inevitably discourage users from reporting content, in the belief that doing so is an exercise in futility.

It is not a sustainable approach.

Nearly every authority monitoring online fraud is reporting mass surges in scams since the pandemic, with an estimated £2.3 billion lost since 2020, and many note that Facebook specifically is a prolific conduit.

Which in turn raises the question: how long can Facebook continue to operate with its current ineffective approach to scams? Perhaps until the punitive action taken against Facebook hits its wallet to some meaningful degree. Or perhaps until enough users – the very reason why Facebook is such a profitable enterprise – flee the platform and the scams that come hand-in-hand with it. Perhaps whichever comes first.

In the meantime, Ryan is free to send his requests to other Facebook users until he recruits a victim and scams them out of money. And we wonder what Facebook’s response would be to that victim: that not only did another one of its users get scammed on its platform, but the scammer had been reported to Facebook and was still allowed to continue operating unimpeded?

While moderating countless reports each and every day is certainly a challenge, Facebook’s system is demonstrably not fit for purpose, and based on what we see every day, it’s only going to get worse before some tipping point is reached. The pertinent question is what that tipping point may be.
